Using autoencoders for video data labeling – Labeling Video Data

Autoencoders are a class of neural networks used for unsupervised learning. They learn compact representations of data without needing labels, which makes them a common tool for data representation and compression, and they are widely applied in image and video data analysis. In this section, we will explore the concept of autoencoders, their architecture, and their applications in video data analysis and labeling.

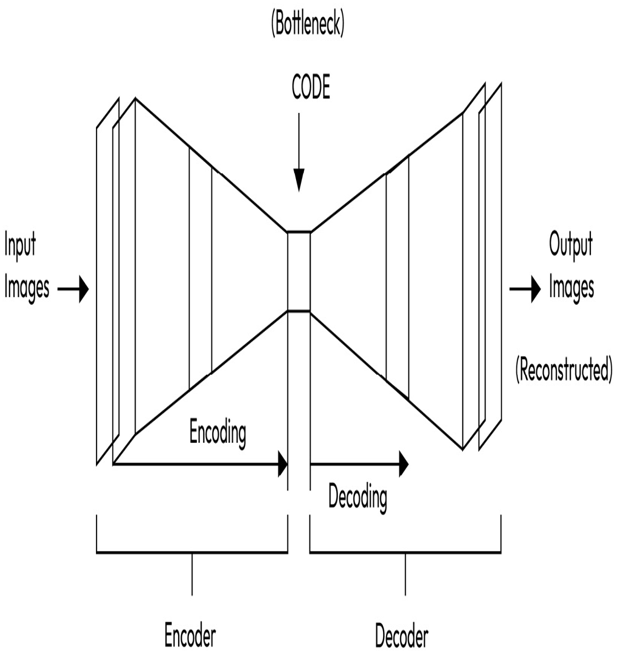

The basic idea behind autoencoders is to learn an efficient representation of data by encoding it into a lower-dimensional latent space and then reconstructing it from this representation. The encoder and decoder work together to achieve this compression and reconstruction. How well they do so depends on training choices such as the activation functions, the loss function, and the optimization algorithm.

An autoencoder is an unsupervised learning model that learns to encode and decode data. It consists of two main components – an encoder and a decoder.

The encoder takes an input data sample, such as an image, and maps it to a lower-dimensional representation, also called a latent code or encoding. The purpose of the encoder is to capture the most important features or patterns in the input data while discarding redundant detail.

Conversely, the decoder takes the encoded representation from the encoder and aims to reconstruct the original input data from this compressed representation. It learns to generate an output that closely resembles the original input. The objective of the decoder is to reverse the encoding process and recreate the input data as faithfully as possible.

The autoencoder is trained by comparing the reconstructed output with the original input, measuring the reconstruction error. The goal is to minimize this reconstruction error during training, which encourages the autoencoder to learn a compact and informative representation of the data.
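The training loop described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production model: it assumes a purely linear encoder and decoder with no activation functions, plain gradient descent, and synthetic 8-dimensional samples standing in for flattened frames. The function name `train_autoencoder` and all hyperparameter values are hypothetical choices made for the example.

```python
import numpy as np

def train_autoencoder(x, latent_dim=2, lr=0.05, epochs=500, seed=0):
    """Train a linear autoencoder by gradient descent on the reconstruction error.

    x has shape (n_samples, n_features). Returns the encoder weights,
    the decoder weights, and the mean squared error recorded at each epoch.
    """
    rng = np.random.default_rng(seed)
    n_features = x.shape[1]
    w_enc = rng.normal(0.0, 0.1, size=(n_features, latent_dim))
    w_dec = rng.normal(0.0, 0.1, size=(latent_dim, n_features))
    losses = []
    for _ in range(epochs):
        z = x @ w_enc                  # encoder: input -> latent code
        x_hat = z @ w_dec              # decoder: latent code -> reconstruction
        err = x_hat - x
        losses.append(float(np.mean(err ** 2)))   # reconstruction error (MSE)
        grad_out = 2.0 * err / err.size           # d(MSE) / d(x_hat)
        grad_dec = z.T @ grad_out
        grad_enc = x.T @ (grad_out @ w_dec.T)
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec, losses

# Synthetic data (assumption): 8-dimensional samples that really depend on 2 factors.
rng = np.random.default_rng(42)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 8))
samples = latent @ mixing + 0.01 * rng.normal(size=(200, 8))

w_enc, w_dec, losses = train_autoencoder(samples)
codes = samples @ w_enc                # compressed representation of each sample
print(f"reconstruction error: first epoch {losses[0]:.4f}, last epoch {losses[-1]:.4f}")
```

Comparing the first and last recorded errors shows whether training reduced the reconstruction error. A real video model would typically replace the two weight matrices with convolutional layers and nonlinear activations.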

Because the latent representation has fewer dimensions than the input, the model cannot simply copy the data through. To reconstruct the input well, it must keep the most salient features and drop the rest. This property makes autoencoders useful for tasks such as data compression, denoising, and anomaly detection.

Figure 9.3 – An autoencoder network

Autoencoders can be used to label video data by first training the autoencoder to reconstruct the original input frames, and then using the learned representations to perform classification or clustering on the encoded frames.

Here are the steps you can follow to use autoencoders to label video data:

- Collect and preprocess the video data: This involves converting the videos into frames, resizing them, and normalizing pixel values to a common scale.

- Train the autoencoder: You can use a convolutional autoencoder to learn the underlying patterns in the video frames. The encoder network takes in a frame as input and produces a compressed representation of the frame, while the decoder network takes in the compressed representation and produces a reconstructed version of the original frame. The autoencoder is trained to minimize the difference between the original and reconstructed frames using a loss function, such as mean squared error.

- Encode the frames: Once the autoencoder is trained, you can use the encoder network to encode each frame in the video into a compressed representation.

- Perform classification or clustering: The encoded frames can now be used as input to a classification or clustering algorithm. For example, if you have labels for some of the frames, you can train a classifier such as a neural network on their encodings and use it to predict labels for the remaining frames. Alternatively, you can use clustering algorithms such as k-means or hierarchical clustering to group similar frames together without any labels.

- Label the video: Once you have predicted the label or cluster for each frame in the video, you can assign a label to the entire video based on the majority label or cluster.
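The steps above can be sketched end to end as follows. This is a simplified illustration under several assumptions: the frames are synthetic grayscale arrays rather than decoded video, resizing is approximated by striding, and a PCA projection stands in for the encoder of a trained convolutional autoencoder. The helper names (`preprocess`, `fit_pca_encoder`, `kmeans`) are hypothetical and defined only for this example.

```python
from collections import Counter
import numpy as np

def preprocess(frames, step=2):
    """Crudely downsample by striding, scale pixels to [0, 1], flatten each frame."""
    small = np.asarray(frames)[:, ::step, ::step].astype(np.float32) / 255.0
    return small.reshape(len(small), -1)

def fit_pca_encoder(x, latent_dim=2):
    """Stand-in for a trained encoder (assumption): project onto top principal axes."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    basis = vt[:latent_dim].T
    return lambda batch: (batch - mean) @ basis

def kmeans(codes, k=2, iters=20, seed=0):
    """Basic k-means: returns one cluster index per encoded frame."""
    rng = np.random.default_rng(seed)
    centers = codes[rng.choice(len(codes), size=k, replace=False)].copy()
    for _ in range(iters):
        dists = np.linalg.norm(codes[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = codes[labels == j]
            if len(members):           # keep the old center if a cluster is empty
                centers[j] = members.mean(axis=0)
    return labels

# Synthetic videos (assumption): one made of dark frames, one of bright frames.
rng = np.random.default_rng(1)
videos = {
    "video_a": rng.integers(30, 50, size=(30, 16, 16), dtype=np.uint8),
    "video_b": rng.integers(190, 210, size=(30, 16, 16), dtype=np.uint8),
}

x = preprocess(np.concatenate(list(videos.values())))   # collect and preprocess
encode = fit_pca_encoder(x)                             # stand-in for training
frame_labels = kmeans(encode(x), k=2)                   # encode, then cluster

# Label each video with the majority cluster of its frames.
video_labels, start = {}, 0
for name, frames in videos.items():
    votes = frame_labels[start:start + len(frames)]
    video_labels[name] = Counter(votes.tolist()).most_common(1)[0][0]
    start += len(frames)
print(video_labels)
```

The cluster indices themselves are arbitrary, so a person still needs to inspect a few frames from each cluster and attach a meaningful name to it. With real footage, the striding and PCA steps would be replaced by proper frame extraction, resizing, and the encoder half of the trained autoencoder.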

Keep in mind that autoencoders can be computationally expensive to train, especially on large video datasets, where every frame is a training sample. The quality of the resulting labels also depends on choosing an architecture and hyperparameters, such as the size of the latent representation, that suit your specific video data and labeling task.
