A hands-on example to label video data using autoencoders – Labeling Video Data-1

Let’s see some example Python code to label the video data, using a sample dataset:

- Load and preprocess video data To begin, we will read the video files from a directory and extract the frames for each video. Then, when we have a dataset of labeled video frames. We will split the data into training and testing sets for evaluation purposes.

Let’s import the libraries and define the functions:

import cv2

import numpy as np

import cv2

import os

from tensorflow import keras

Let us write a function to load all video data from a directory:

Function to load all video data from a directory

def load_videos_from_directory(directory, max_frames=100

):

video_data = []

List all files in the directory

files = os.listdir(directory)

for file in files:

if file.endswith(“.mp4”):

video_path = os.path.join(directory, file)

frames = load_video(video_path, max_frames)

video_data.append(frames)

return np.concatenate(video_data)

Let us write a function to load each video data from a path:

Function to load video data from file path

def load_video(file_path, max_frames=100, frame_shape=(64, 64)):

cap = cv2.VideoCapture(file_path)

frames = []

frame_count = 0

while True:

ret, frame = cap.read()

if not ret or frame_count >= max_frames:

break

frame = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

frame = cv2.resize(frame, frame_shape)

frame = np.expand_dims(frame, axis=-1)

frames.append(frame / 255.0)

frame_count += 1

cap.release()

Pad or truncate frames to max_frames

frames = frames + [np.zeros_like(frames[0])] * (max_frames – len(frames))

return np.array(frames)

Now, let’s specify the directories and load the video data:

Specify the directories

brushing_directory = “/datasets/Ch9/Kinetics/autoencode/brushing”

dancing_directory = “/datasets/Ch9/Kinetics/autoencode/dance”

Load video data for “brushing”

brushing_data = load_videos_from_directory(brushing_directory)

Load video data for “dancing”

dancing_data = load_videos_from_directory(dancing_directory)

Find the minimum number of frames among all videos

min_frames = min(min(len(video) for video in brushing_data), min(len(video) for video in dancing_data))

Ensure all videos have the same number of frames

brushing_data = for video in brushing_data]

dancing_data = for video in dancing_data]

Reshape the data to have the correct input shape

Selecting the first instance from brushing_data for training and dancing_data for testing

train_data = brushing_data[0]

test_data = dancing_data[0]

Define the input shape based on the actual dimensions of the loaded video frames

input_shape= train_data.shape[1:]

print(“Input shape:”, input_shape)

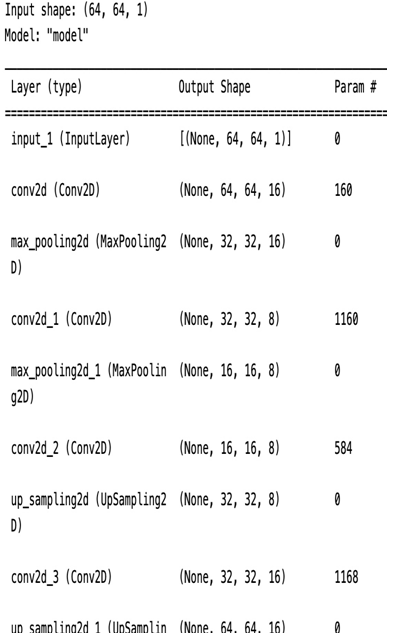

- Build the autoencoder model: In this step, we construct the architecture of the autoencoder model using TensorFlow and the Keras library. The autoencoder consists of an encoder and a decoder. The encoder part gradually reduces the spatial dimensions of the input frames through convolutional and max-pooling layers, capturing important features:

Define the encoder part of the autoencoder

encoder_input = keras.Input(shape=input_shape)

encoder = keras.layers.Conv2D(filters=16, kernel_size=3, \

activation=”relu”, padding=”same”)(encoder_input)

encoder = keras.layers.MaxPooling2D(pool_size=2)(encoder)

encoder = keras.layers.Conv2D(filters=8, kernel_size=3, \

activation=”relu”, padding=”same”)(encoder)

encoder = keras.layers.MaxPooling2D(pool_size=2)(encoder)

Define the decoder part of the autoencoder

decoder = keras.layers.Conv2D(filters=8, kernel_size=3, \

activation=”relu”, padding=”same”)(encoder)

decoder = keras.layers.UpSampling2D(size=2)(decoder)

decoder = keras.layers.Conv2D(filters=16, kernel_size=3, \

activation=”relu”, padding=”same”)(decoder)

decoder = keras.layers.UpSampling2D(size=2)(decoder)

Modify the last layer to have 1 filter (matching the number of channels in the input)

decoder_output = keras.layers.Conv2D(filters=1, kernel_size=3, \

activation=”sigmoid”, padding=”same”)(decoder)

Create the autoencoder model

autoencoder = keras.Model(encoder_input, decoder_output)

autoencoder.summary()

Here is the output:

Figure 9.4 – The model summary

- Compile and train the autoencoder model: Once the autoencoder model is constructed, we need to compile it with appropriate loss and optimizer functions before training. In this case, we will use the Adam optimizer, which is a popular choice for gradient-based optimization. The binary_crossentropy loss function is suitable for the binary classification task of reconstructing the input frames accurately. Finally, we will train the autoencoder on the training data for a specified number of epochs and a batch size of 32: Compile the model

autoencoder.compile(optimizer=”adam”, loss=”binary_crossentropy”)

Leave a Reply